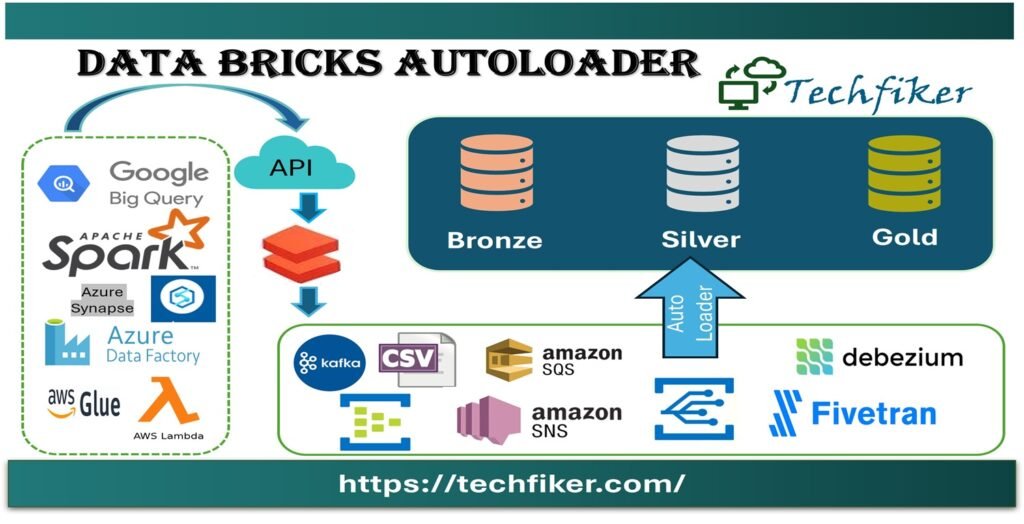

Databricks Auto Loader simplifies data ingestion by automatically detecting, processing, and loading new data files from cloud storage, reducing manual effort and increasing productivity.

Databricks Auto Loader supports loading data from the following cloud storage platforms:

- AWS S3

- Azure Data Lake Storage Gen2

- Azure Blob Storage

- Azure Data Lake Storage Gen1 (ADLS Gen1)

- Google Cloud Storage

- Databricks File System
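Each platform is addressed through its own URI scheme in the load path. As a rough illustration, the sketch below maps common schemes to the platforms listed above. The helper name `storage_platform` and the example bucket and account names are hypothetical. Paths without a scheme, like the `/dbc-ds/...` paths used later, are assumed here to resolve to DBFS, the usual default filesystem on Databricks.

```python
from urllib.parse import urlparse

# URI schemes commonly used for each storage platform (not exhaustive).
SCHEME_TO_PLATFORM = {
    "s3": "AWS S3",
    "s3a": "AWS S3",
    "abfss": "Azure Data Lake Storage Gen2",
    "wasbs": "Azure Blob Storage",
    "adl": "Azure Data Lake Storage Gen1",
    "gs": "Google Cloud Storage",
    "dbfs": "Databricks File System",
}


def storage_platform(path: str) -> str:
    """Return the storage platform a load path points at (hypothetical helper)."""
    scheme = urlparse(path).scheme.lower()
    if not scheme:
        # Assumption: scheme-less paths resolve to DBFS, the default on Databricks.
        return "Databricks File System"
    if scheme not in SCHEME_TO_PLATFORM:
        raise ValueError(f"unrecognized storage scheme: {scheme!r}")
    return SCHEME_TO_PLATFORM[scheme]


# Example paths; bucket, container, and account names are made up.
print(storage_platform("s3://my-bucket/raw/products/"))                          # AWS S3
print(storage_platform("abfss://raw@myaccount.dfs.core.windows.net/products/"))  # Azure Data Lake Storage Gen2
print(storage_platform("/dbc-ds/Masters/Products/"))                             # Databricks File System
```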

Databricks Auto Loader also supports ingesting data in the following file formats:

- JSON

- CSV

- PARQUET

- AVRO

- ORC

- TEXT

- BINARY FILE
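The labels above correspond to values of the `cloudFiles.format` option; note that binary files are spelled `binaryFile` in the option value. A minimal sketch of a hypothetical helper that normalizes these labels into option values:

```python
# Map the format labels listed above to values accepted by cloudFiles.format.
CLOUDFILES_FORMATS = {
    "JSON": "json",
    "CSV": "csv",
    "PARQUET": "parquet",
    "AVRO": "avro",
    "ORC": "orc",
    "TEXT": "text",
    "BINARY FILE": "binaryFile",
}


def cloudfiles_format(label: str) -> str:
    """Normalize a human-readable format label (hypothetical helper)."""
    key = " ".join(label.split()).upper()  # collapse whitespace, ignore case
    if key not in CLOUDFILES_FORMATS:
        raise ValueError(f"format not in the list above: {label!r}")
    return CLOUDFILES_FORMATS[key]


print(cloudfiles_format("Parquet"))       # parquet
print(cloudfiles_format("binary  file"))  # binaryFile
```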

Databricks Auto Loader provides a Structured Streaming source called cloudFiles that automatically processes new files as they arrive in a configured input directory. In Delta Live Tables, Auto Loader can be used from both Python and SQL.
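Conceptually, incremental ingestion means remembering which files have already been processed and handing only the new ones to each micro-batch. The toy model below illustrates that idea only; it is not how Auto Loader is implemented (Auto Loader persists metadata about discovered files in its checkpoint location), and `discover_new_files` is a hypothetical name.

```python
from typing import Iterable, List, Set


def discover_new_files(listing: Iterable[str], processed: Set[str]) -> List[str]:
    """Toy model: return files not seen before and remember them (hypothetical)."""
    new_files = sorted(set(listing) - processed)
    processed.update(new_files)  # stands in for Auto Loader's checkpointed state
    return new_files


state: Set[str] = set()
print(discover_new_files(["a.csv", "b.csv"], state))           # ['a.csv', 'b.csv']
print(discover_new_files(["a.csv", "b.csv", "c.csv"], state))  # ['c.csv']
```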

Databricks Auto Loader can process billions of files when ingesting data from cloud storage, and it scales to ingest millions of files per hour in near real time.

By writing a few lines of declarative Python or SQL, we can use Databricks Auto Loader to incrementally ingest data into Delta Live Tables.

Databricks Auto Loader Features

- Incrementally and efficiently processing new data files as they arrive in cloud storage

- Enabling event-driven ingestion through file notification mode

- Automatically inferring the schema of incoming files, with schema hints to override the inferred types of specific columns

- Automatically adapting to schema changes in the incoming data (schema evolution)

- Rescuing data that does not match the expected schema into a rescued data column instead of dropping it
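Most of these features are controlled through `cloudFiles.*` reader options. The sketch below collects them into a dictionary that could be passed to `spark.readStream.format("cloudFiles").options(**opts)`. The helper `feature_options` is hypothetical; the option names and the four schema evolution modes are taken from the Auto Loader documentation. File notification mode additionally needs cloud permissions to set up notification and queue services, which this sketch does not cover.

```python
from typing import Dict, Optional

# Documented values for cloudFiles.schemaEvolutionMode.
SCHEMA_EVOLUTION_MODES = {"addNewColumns", "rescue", "failOnNewColumns", "none"}


def feature_options(
    fmt: str,
    use_notifications: bool = False,
    schema_hints: Optional[str] = None,
    evolution_mode: str = "addNewColumns",
) -> Dict[str, str]:
    """Build Auto Loader options for the features listed above (hypothetical helper)."""
    if evolution_mode not in SCHEMA_EVOLUTION_MODES:
        raise ValueError(f"unknown schema evolution mode: {evolution_mode!r}")
    opts = {
        "cloudFiles.format": fmt,
        # "true" switches from directory listing to file notification mode.
        "cloudFiles.useNotifications": str(use_notifications).lower(),
        # "addNewColumns" adds newly seen columns to the schema after a stream restart;
        # "rescue" keeps the schema fixed and records unexpected data in _rescued_data.
        "cloudFiles.schemaEvolutionMode": evolution_mode,
    }
    if schema_hints:
        # Overrides the inferred types only for the listed columns.
        opts["cloudFiles.schemaHints"] = schema_hints
    return opts


# Usage inside a Databricks notebook (not executed here):
#   spark.readStream.format("cloudFiles").options(**feature_options("json")).load(path)
print(feature_options("json", use_notifications=True, schema_hints="id BIGINT"))
```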

Databricks Auto Loader Syntax for DLT

Databricks Auto Loader supports Python and SQL syntax in Delta Live Tables, making it easy to integrate into your data workflows.

Python Syntax for Databricks Auto Loader:

This example demonstrates how to use Databricks Auto Loader to create a dataset from the CSV files in a directory using Python syntax.

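```python
import dlt

# Streaming DLT table that incrementally ingests CSV files with Auto Loader.
# `spark` is provided by the Databricks runtime.
@dlt.table
def Products():
    return (
        spark.readStream.format("cloudFiles")
        .option("cloudFiles.format", "csv")
        .load("/dbc-ds/Masters/Products/")
    )
```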
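With CSV input, two extra options are often worth setting: the standard Spark CSV `header` option, and `cloudFiles.inferColumnTypes`, because Auto Loader otherwise infers every CSV column as a string. A hedged sketch that builds these options; the helper name `csv_options` is hypothetical.

```python
from typing import Dict


def csv_options(has_header: bool = True, infer_types: bool = True) -> Dict[str, str]:
    """Auto Loader options for CSV input (hypothetical helper)."""
    return {
        "cloudFiles.format": "csv",
        # Standard Spark CSV option: treat the first line as column names.
        "header": str(has_header).lower(),
        # Without this, Auto Loader infers every CSV column as STRING.
        "cloudFiles.inferColumnTypes": str(infer_types).lower(),
    }


# In a DLT table function this could replace the single .option(...) call:
#   spark.readStream.format("cloudFiles").options(**csv_options()).load(path)
print(csv_options())
```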

Instead of relying on schema inference, we can define the columns manually by supplying a schema. This example reads Parquet files:

```python
import dlt

# Same pattern, but with an explicit DDL schema instead of schema inference.
@dlt.table
def Products():
    return (
        spark.readStream.format("cloudFiles")
        .schema("id INT, ProductName STRING, Description STRING, text STRING")
        .option("cloudFiles.format", "parquet")
        .load("/dbc-ds/Masters/data-001/Products-parquet")
    )
```

SQL Syntax for Databricks Auto Loader:

This example demonstrates how to use Databricks Auto Loader to create a dataset from the CSV files in a directory using SQL syntax.

```sql
CREATE OR REFRESH STREAMING LIVE TABLE Products
AS SELECT * FROM cloud_files("/dbc-ds/Masters/Products/", "csv")
```

Instead of relying on schema inference, we can define the columns manually by passing a schema option to cloud_files. This example reads Parquet files:

```sql
CREATE OR REFRESH STREAMING LIVE TABLE Products
AS SELECT *
FROM cloud_files(
  "/dbc-ds/Masters/data-001/Product-parquet",
  "parquet",
  map("schema", "id INT, ProductName STRING, Description STRING, text STRING")
)
```
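The third argument to `cloud_files` is a `map` of reader options, the same kind of key/value pairs the Python examples pass through `.option(...)`, plus a `schema` key that plays the role of `.schema(...)`. To make that correspondence concrete, here is a hypothetical Python helper, `render_cloud_files`, that renders the SQL call from a path, a format, and an options dictionary. It is a sketch for illustration, not part of any Databricks API.

```python
from typing import Dict, Optional


def _sql_string(value: str) -> str:
    """Wrap a value in double quotes; reject embedded quotes to keep the sketch simple."""
    if '"' in value:
        raise ValueError(f"embedded double quote not supported: {value!r}")
    return f'"{value}"'


def render_cloud_files(path: str, fmt: str, options: Optional[Dict[str, str]] = None) -> str:
    """Render a cloud_files(...) call for DLT SQL (hypothetical helper)."""
    args = [_sql_string(path), _sql_string(fmt)]
    if options:
        # map("k1", "v1", "k2", "v2", ...) alternates keys and values.
        pairs = ", ".join(f"{_sql_string(k)}, {_sql_string(v)}" for k, v in options.items())
        args.append(f"map({pairs})")
    return f"cloud_files({', '.join(args)})"


print(render_cloud_files("/dbc-ds/Masters/Products/", "csv"))
# cloud_files("/dbc-ds/Masters/Products/", "csv")
print(render_cloud_files(
    "/dbc-ds/Masters/data-001/Product-parquet",
    "parquet",
    {"schema": "id INT, ProductName STRING, Description STRING, text STRING"},
))
```

Escaping is deliberately left out; SQL built from untrusted input should not be assembled with string formatting like this.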
